Solving PAM in a hybrid environment using multi-hop sessions with HCP Boundary

Background

- Is your organisation using HCP Boundary as a PAM (privileged access management) solution for hosts residing in private networks across multiple cloud providers?

- Does your organisation have strict networking constraints, such as resources residing in private networks with only outbound access?

- Does your organisation need to use its own Boundary workers instead of HCP-managed workers?

In this article, I will walk through and demo a solution that uses multi-hop sessions with HCP Boundary to access resources in private networks across Azure and AWS.

What we’ll cover in this article/demo

- Working with self-managed PKI ingress workers (Installation & Registration)

- Creating worker-aware targets

- Connecting to targets using Boundary Desktop Application

- Challenges with ingress workers

- Introducing multi-hop sessions with HCP Boundary

- Working demo of multi-hop sessions

Working with self-managed workers

Self-managed workers let Boundary users connect securely to private resources without exposing their organisation's network to the public internet. They authenticate to Boundary with certificates, using public key infrastructure (PKI), which is why they are also called PKI workers.

Networking requirements

- Outbound access to HCP Boundary Control Plane (worker -> HCP control plane)

- Outbound access to the private target (worker -> host)

- Inbound access from the user trying to establish the session (user -> worker on port 9202)
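The requirements above can be spot-checked before installing anything. The following is a minimal sketch, not an official Boundary tool: `check_tcp` and `report` are hypothetical helper names, the check relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command, and the addresses in the usage comments are placeholders. The HCP control plane check is left out because the article does not state its endpoint.

```shell
#!/usr/bin/env bash
set -eu

# check_tcp HOST PORT: succeeds if a TCP connection opens within 3 seconds.
# Assumes bash (for /dev/tcp) and coreutils `timeout` are installed.
check_tcp() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# report LABEL HOST PORT: prints one line describing the result.
report() {
  if check_tcp "$2" "$3"; then
    echo "reachable: $1 ($2:$3)"
  else
    echo "blocked: $1 ($2:$3)"
  fi
}

# Example usage (placeholders; run each line from the machine named first):
#   on the worker: report "worker -> host" "<private IP of target>" 22
#   on the client: report "user -> worker" "<public IP of ingress worker>" 9202
```

A "blocked" result only says that the TCP handshake failed from that machine; it does not distinguish a firewall rule from a service that is not running yet.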

Installation of Ingress worker

Assuming you have a running HCP Boundary cluster, follow the steps below:

- Create an Azure VM and an AWS EC2 Linux machine with private IPs only (no public IPs associated). These are the target hosts.

- Create the Azure and AWS ingress workers in their respective cloud providers using the steps below:

- Install the boundary-worker utility on both workers:

mkdir -p /home/japneet/boundary/ && cd /home/japneet/boundary/

curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -

sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

sudo apt-get update && sudo apt-get install boundary-worker-hcp -y

- Add pki-worker.hcl on both workers in the boundary directory created above (/home/japneet/boundary in this demo). Replace the value of hcp_boundary_cluster_id with your cluster ID.

disable_mlock = true

hcp_boundary_cluster_id = "<hcp-boundary-cluster-id>"

listener "tcp" {

address = "0.0.0.0:9202"

purpose = "proxy"

}

worker {

# replace IP with the public IP address of ingress/upstream worker

public_addr = "20.104.108.10"

auth_storage_path = "/home/japneet/boundary/worker1"

tags {

# for AWS, replace tag name with aws-ingress

type = ["azure-ingress"]

}

}

- Run the Boundary worker using the config file above.

boundary-worker server -config="/home/japneet/boundary/pki-worker.hcl"

- Copy the Worker Auth Registration Request value from the output of the command above. You will use it next to register this worker with the HCP Boundary control plane.

Registration of Ingress Worker

- Go to the HCP Boundary cluster URL and authenticate with admin credentials.

- Once logged in, navigate to the Workers page.

- Click New, scroll to the bottom of the New PKI Worker page, and paste the Worker Auth Registration Request value you copied earlier.

- Click Register Worker.

- Repeat the steps above for the other ingress worker.
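If you prefer the command line to the Admin Console, the same registration can be scripted. The sketch below assumes the worker's startup output was saved to a file and that the value follows a "Worker Auth Registration Request:" label, as described above. The helper names and the sample token are made up for illustration; the script only prints the `boundary workers create worker-led` command rather than running it, since running it requires an authenticated CLI session.

```shell
#!/usr/bin/env bash
set -eu

# extract_registration_request FILE: prints the value that follows the
# "Worker Auth Registration Request:" label in saved worker output.
extract_registration_request() {
  sed -n 's/^.*Worker Auth Registration Request:[[:space:]]*//p' "$1" | head -n 1
}

# register_cmd TOKEN: prints (does not run) the CLI registration command.
register_cmd() {
  printf 'boundary workers create worker-led -worker-generated-auth-token=%s\n' "$1"
}

# Demo with a made-up log line; the token is a placeholder, not real output.
log="$(mktemp)"
printf '  Worker Auth Registration Request: %s\n' 'EXAMPLE-TOKEN-123' > "$log"
register_cmd "$(extract_registration_request "$log")"
```

Printing the command first makes it easy to review before pasting it into a shell that is already authenticated against the HCP Boundary cluster.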

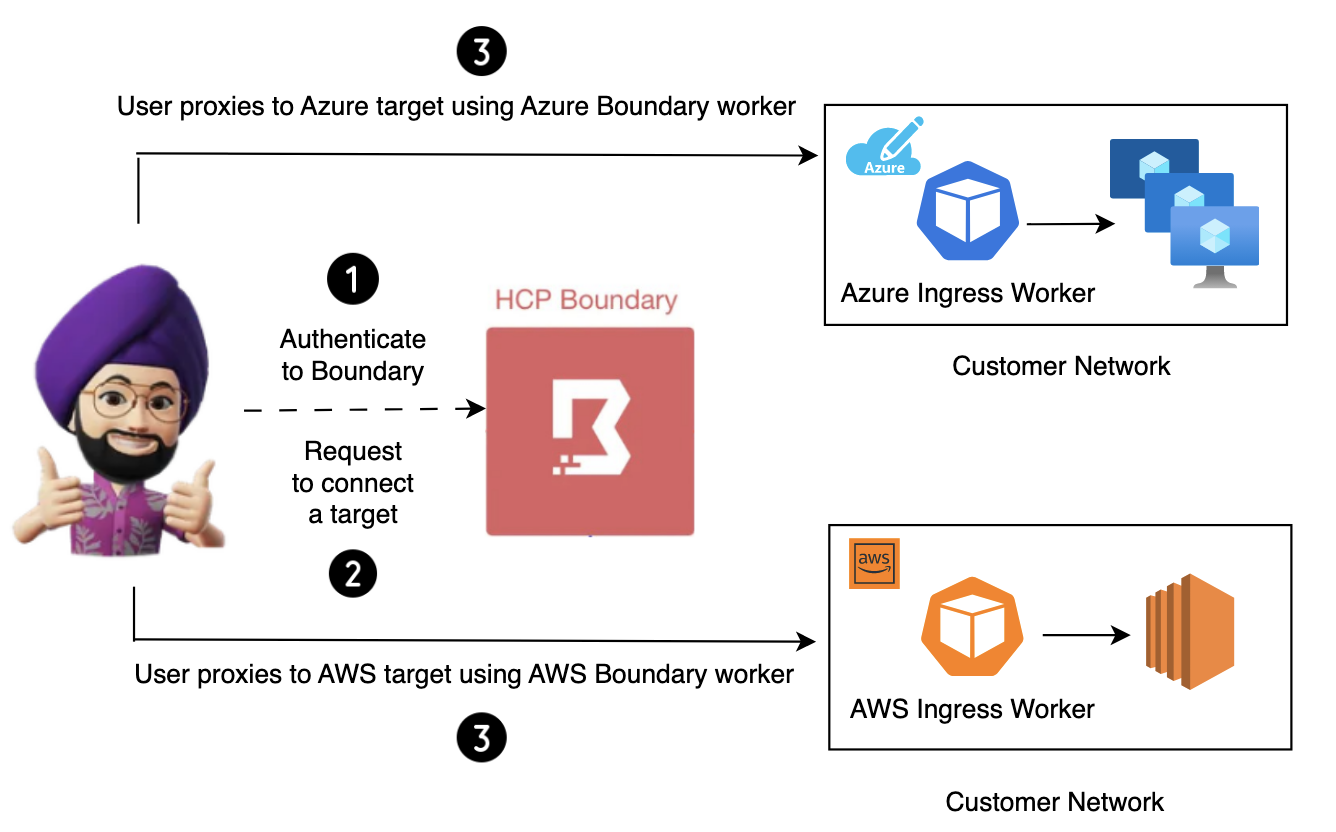

Awesome! You now have two self-managed PKI ingress workers (one for Azure and one for AWS) registered in the HCP Boundary cluster. But how do you tell Boundary which ingress worker to use when connecting to an Azure or AWS target? Let’s see this now.

Creating worker-aware targets

- Open the HCP Boundary Admin Console UI and create an organisation called “multi-hop” followed by a project called “multi-hop”.

- Navigate to the Targets page within the multi-hop project.

- Create two targets (one for Azure and one for AWS) with the following details:

- Target name (aws-target and azure-target)

- Target address (private IP of the AWS EC2 instance and the Azure VM respectively)

- Default port 22

- Toggle the Ingress worker filter switch and add an ingress worker filter that selects workers with the following tag:

# For azure-target

"azure-ingress" in "/tags/type"

# For aws-target

"aws-ingress" in "/tags/type"

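The same targets can also be described as CLI commands. This is a sketch under assumptions: `tag_filter` and `create_target_cmd` are hypothetical helpers, the project ID and addresses are placeholders, and the script prints the `boundary targets create tcp` commands instead of executing them so that you can review them first.

```shell
#!/usr/bin/env bash
set -eu

# tag_filter TAG: prints a worker filter that matches workers whose
# "type" tag contains TAG, in the same form as the filters shown above.
tag_filter() {
  printf '"%s" in "/tags/type"' "$1"
}

# create_target_cmd PROJECT_ID NAME ADDRESS TAG
# Prints (does not run) the command that creates a worker-aware TCP target.
create_target_cmd() {
  printf "boundary targets create tcp -scope-id %s -name %s -address %s -default-port 22 -ingress-worker-filter '%s'\n" \
    "$1" "$2" "$3" "$(tag_filter "$4")"
}

# Placeholders in angle brackets must be replaced before running the output.
create_target_cmd "<project-id>" azure-target "<azure-vm-private-ip>" azure-ingress
create_target_cmd "<project-id>" aws-target "<aws-ec2-private-ip>" aws-ingress
```

Keeping the filter in one helper means the tag naming convention (azure-ingress, aws-ingress) lives in a single place.
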
Connecting to targets using Boundary Desktop Application

With the ingress filters assigned above, any connection to a target is forced to proxy through the matching ingress worker.

- The end user authenticates to the HCP Boundary cluster using the Boundary Desktop application.

- The user navigates to the Targets section and sees the targets they are authorized for, based on their roles and permissions.

- The user connects to the AWS or Azure target using the “Connect” button.

- A proxy URL is published, which the user uses to establish a session:

ssh username@127.0.0.1 -p <port mentioned in proxy url>
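To avoid retyping the port by hand, the proxy address can be turned into the SSH command with a small helper. This is a sketch that assumes the proxy address is copied in the form HOST:PORT (for example 127.0.0.1:50123); `ssh_cmd` is a hypothetical name and the port is a made-up example.

```shell
#!/usr/bin/env bash
set -eu

# ssh_cmd USER PROXY_ADDR: prints the ssh command for a local proxy
# address given as HOST:PORT.
ssh_cmd() {
  host="${2%:*}"    # everything before the last colon
  port="${2##*:}"   # everything after the last colon
  printf 'ssh %s@%s -p %s\n' "$1" "$host" "$port"
}

ssh_cmd username 127.0.0.1:50123
```

The Boundary CLI also offers `boundary connect ssh -target-id <target-id>`, which starts the local proxy and the SSH client in one step if you are not using the desktop application.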

Challenges with Ingress workers

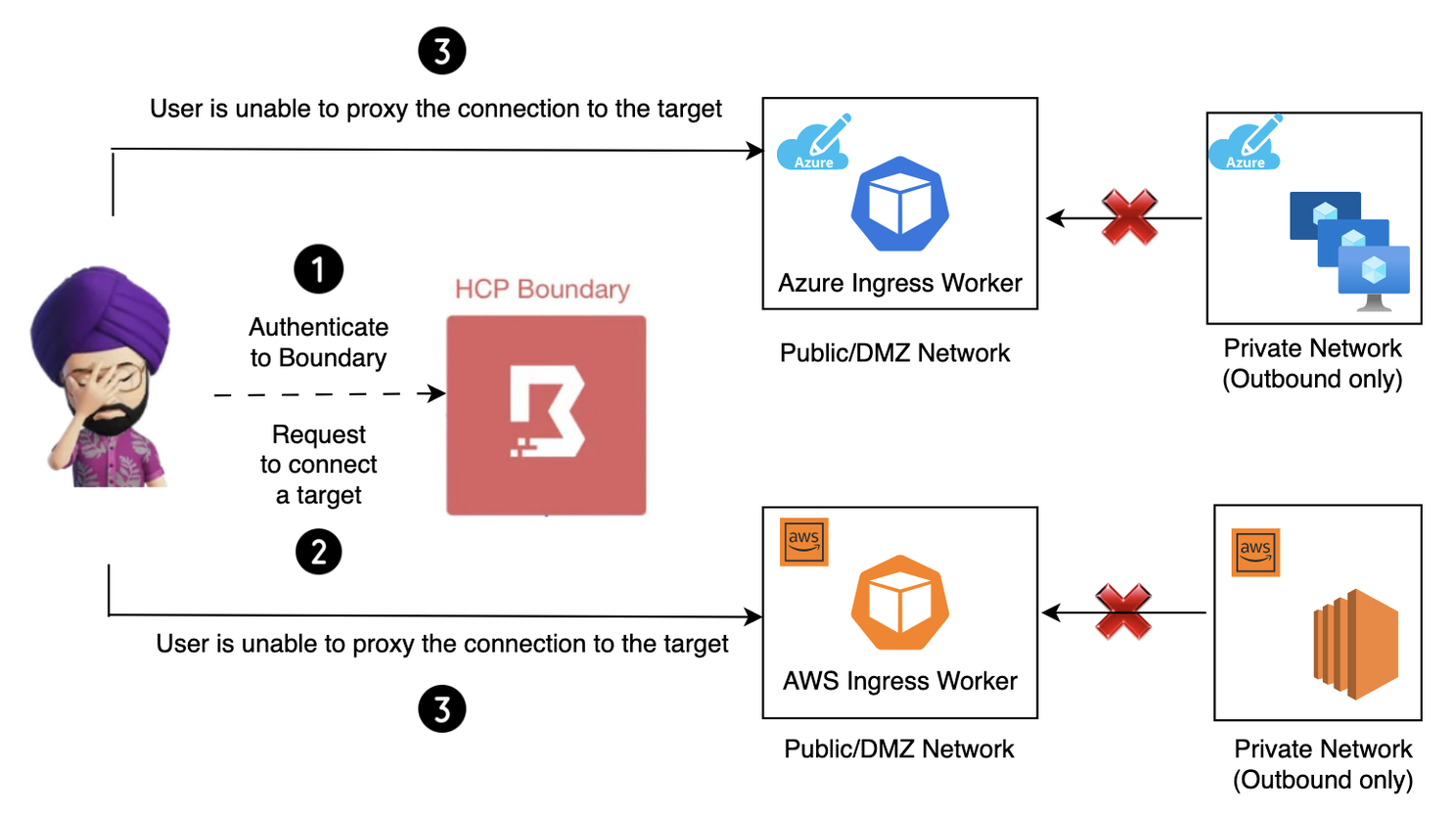

- The Boundary ingress workers must be reachable by users/clients, which means either the users need VPN access or the ingress worker must be publicly accessible through a public IP. Some organisations may not allow this.

- Other organisations might allow access to Boundary ingress workers residing in a public network, but their resources sit in a private network with only outbound access, so the ingress worker cannot proxy the connection.

Managing multi-hop sessions with HCP Boundary

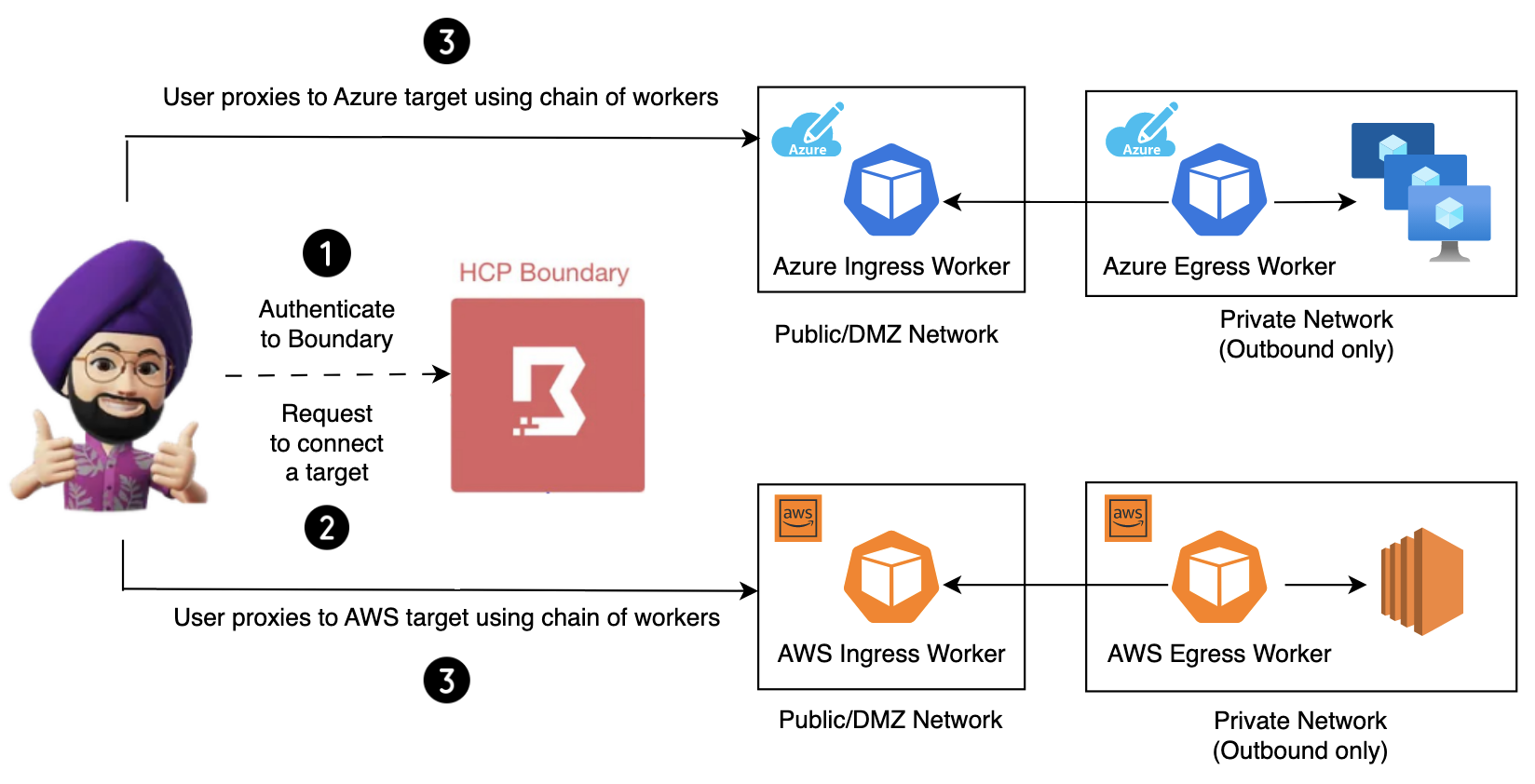

To mitigate these networking challenges, the 0.12 release of HCP Boundary introduced multi-hop sessions: you can configure different types of Boundary workers according to their networking requirements and chain them together through reverse-proxy connections. This creates multiple “hops” in the chain from a worker to a controller.

- The new type of worker introduced as part of this feature is called an “Egress” worker.

- This worker has network access to the hosts and connects to an existing upstream/ingress worker that is accessible to the clients.

- The “Egress” worker can reside in a private network with only outbound access and be inaccessible to the clients.

Installation of Egress Worker

- Create two egress workers (one in each cloud) with private IPs and only outbound access.

- Install the same boundary-worker utility as on the ingress workers.

- Add pki-worker.hcl on both workers in the same boundary directory (/home/japneet/boundary in this demo). Note the initial_upstreams parameter, which tells the egress worker which ingress worker to connect to.

disable_mlock = true

listener "tcp" {

address = "0.0.0.0:9202"

purpose = "proxy"

}

worker {

# Use initial_upstreams instead of public_addr

initial_upstreams = ["<private IP of ingress worker>:9202"]

auth_storage_path = "/home/japneet/boundary/worker2"

tags {

# for AWS, replace tag name with aws-egress

type = ["azure-egress"]

}

}

- Run the Boundary worker using the config file above.

boundary-worker server -config="/home/japneet/boundary/pki-worker.hcl"

- Copy the Worker Auth Registration Request value from the output of the command above. You will use it next to register this worker with the HCP Boundary control plane.
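Because the egress configuration differs between clouds only in the upstream address and the tag, it can be rendered from a template. The sketch below is one way to do that; `render_egress_config` is a hypothetical helper, and the upstream address is left as the same placeholder used in the config above.

```shell
#!/usr/bin/env bash
set -eu

# render_egress_config UPSTREAM_IP AUTH_STORAGE_PATH CLOUD
# Prints an egress worker config. Unlike the ingress config, it has no
# hcp_boundary_cluster_id and no public_addr: the worker reaches the
# control plane through the ingress worker named in initial_upstreams.
render_egress_config() {
  cat <<EOF
disable_mlock = true

listener "tcp" {
  address = "0.0.0.0:9202"
  purpose = "proxy"
}

worker {
  initial_upstreams = ["$1:9202"]
  auth_storage_path = "$2"
  tags {
    type = ["$3-egress"]
  }
}
EOF
}

# Replace the placeholder before using the output as pki-worker.hcl.
render_egress_config "<private IP of ingress worker>" /home/japneet/boundary/worker2 azure
```

Redirect the output to pki-worker.hcl on each egress worker, passing aws instead of azure for the AWS worker.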

Registration of Egress Worker

Follow the same steps as for the ingress worker to register the egress worker.

Modifying worker-aware targets

- Open the Boundary Admin Console UI and navigate to the Targets page within the “multi-hop” org.

- Click aws-target or azure-target. Scroll to the bottom of the target details page and click Edit Form.

- Toggle the Egress worker filter switch and add an egress worker filter that selects workers with the following tag:

# For azure-target

"azure-egress" in "/tags/type"

# For aws-target

"aws-egress" in "/tags/type"

- Refresh the targets in the Boundary Desktop application and you should be able to connect to the target again.
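The egress filter can also be applied from the CLI instead of the Edit Form. This sketch prints one `boundary targets update tcp` command per target without running it; `egress_update_cmd` is a hypothetical helper and the target IDs are placeholders you would look up in your own cluster.

```shell
#!/usr/bin/env bash
set -eu

# egress_update_cmd TARGET_ID CLOUD
# Prints (does not run) the command that adds the egress worker filter
# for CLOUD ("azure" or "aws") to an existing TCP target.
egress_update_cmd() {
  printf "boundary targets update tcp -id %s -egress-worker-filter '\"%s-egress\" in \"/tags/type\"'\n" "$1" "$2"
}

# Placeholders in angle brackets must be replaced before running the output.
egress_update_cmd "<azure-target-id>" azure
egress_update_cmd "<aws-target-id>" aws
```

With both filters set on a target, a session enters through the ingress worker and leaves towards the host through the egress worker, which is the “hop” described in this article.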

Working Demo

Conclusion

Awesome! You have now connected to a target residing in a private network with only outbound access by creating a chain of “ingress” and “egress” workers and establishing a “hop” in the session.